Using Machine Learning to Forecast the Weather and Climate

An Overview of Three CCAI Tutorials on Forecasting

Intro

- Climate change has enormous implications for extreme events and hazardous weather.

- ML offers unprecedented potential to predict such events and thus adapt to and mitigate their effects.

- Our three forecasting tutorials illustrate end-to-end pipelines that use ML tools to predict extremes and climate variability.

As I flew across the eastern coast of Canada in mid-June 2023, it was impossible to overlook the hazy smoke clouds caused by the ongoing wildfires. As a climate scientist, it’s hard to ignore the link between these fires and the massive carbon emissions we humans release into the atmosphere each year - a staggering 37+ billion tons of CO2! While this gas remains invisible to the human eye, the cumulative impact of depositing such enormous quantities of it into the atmosphere doesn’t; across time and space, climate change is now materializing into phenomena such as the rapid spread of flames, heat waves, air pollution, and even alterations to planetary-scale climate modulators such as El Niño.

Using forecasting to adapt to present weather conditions is a vitally important undertaking. However, it’s also difficult because weather varies so widely across spatial and temporal scales. Take, for example, wildfires. They are difficult to predict and model because it is hard to get accurate input data for such models. Wildfire models involve tiny spatial scales and require pinpoint-accurate atmospheric data (such as wind, which transports the fire) and fuel measurements.

Traditionally, for wildfires or similar short-range extreme weather events, we use a predictive framework tailored to short time scales. For climate, however, we use a different prediction framework to capture long time scales and extensive spatial coverage. Yet weather and climate are intrinsically linked and influence each other.

Efforts to use a modeling framework where both weather and climate are represented in one system started in the ’90s with the idea of “seamless prediction” (Shukla, 1998). Seamless prediction uses a single framework for forecasting across a range of timescales, from days to years, and represents one of the ultimate aims for weather and climate prediction. Realizing that aim requires finer and finer spatial and temporal resolutions calculated over long time periods, which translates into a prodigious computational load. This presents a problem: while our computers have gotten bigger, they haven’t necessarily gotten faster. Within the limits of current computation, high-resolution simulations are feasible only over short periods; simulating hundreds of years at a spatial resolution of 1 km or finer is prohibitively expensive.
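To get a feel for the scale of the problem, here is a rough back-of-the-envelope sketch. It assumes a uniform horizontal grid covering Earth's surface (about 510 million km²), ignores vertical levels, and assumes the time step shrinks in proportion to the grid spacing. These are illustrative assumptions, not figures from the tutorials.

```python
# Back-of-the-envelope estimate of how model cost grows with resolution.
# Assumptions (illustrative, not from the tutorials): a uniform horizontal grid,
# no vertical levels, and a time step that shrinks in proportion to grid spacing.

EARTH_SURFACE_KM2 = 510_000_000  # approximate surface area of Earth


def horizontal_cells(spacing_km: float) -> int:
    """Approximate number of grid columns at a given horizontal spacing."""
    return round(EARTH_SURFACE_KM2 / spacing_km**2)


def relative_cost(spacing_km: float, reference_km: float = 25.0) -> float:
    """Cost relative to a reference grid: cells scale as 1/dx^2, steps as 1/dx."""
    return (reference_km / spacing_km) ** 3


for dx in (100.0, 25.0, 1.0):
    print(f"{dx:>5.0f} km: {horizontal_cells(dx):>12,d} columns, "
          f"{relative_cost(dx):>10,.2f}x the cost of a 25 km run")
```

Under these assumptions, going from 25 km to 1 km spacing multiplies the cost by more than 15,000, before counting vertical levels or the length of the simulation.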

Machine Learning (ML) methodologies and accelerated computing harnessing GPU technology offer unprecedented potential to provide faster, accurate, long-term predictions as an alternative or a complement to traditional, model-based methods.

Our series of forecasting tutorials illustrates how to produce such fast and accurate predictions for three different types of phenomena spanning different temporal and spatial scales: (1) El Niño, (2) synoptic-scale weather, and (3) extreme events at the edge of the synoptic scale.

The ML methodology behind all three tutorials is the Convolutional Neural Network (CNN). That one methodology can address three different spatial and temporal scales suggests that we are moving closer to a seamless prediction framework.

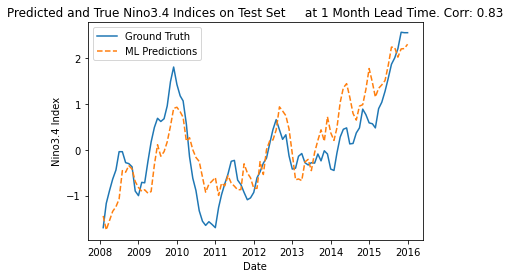

Forecasting the El Niño Southern Oscillation (ENSO) with Machine Learning

The El Niño prediction tutorial walks users through a hierarchy of ML-based predictions of an ENSO indicator, from simple (regression-based) to complex (deep learning, CNN-based) models. Dynamical models forecast El Niño by modeling the physics of the atmosphere and ocean (Cane et al. 1986), while ML methodologies mostly use data produced by long climate model simulations and, in some cases, observations. CNNs have successfully predicted El Niño with greater skill than the dynamical models typically used (Ham et al. 2019). The ENSO tutorial walks the student through multiple statistical tools for predicting ENSO, with discussion questions that invite the student to think about and adapt each tool to their own needs.
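The simple end of that hierarchy can be sketched in a few lines. The example below is not the tutorial's code: it fits an ordinary least-squares regression to a synthetic, ENSO-like monthly index (a damped oscillation plus noise) and predicts the index three months ahead from the previous three months. The series, the lag count, and the lead time are all illustrative assumptions.

```python
import numpy as np

# Synthetic stand-in for a monthly ENSO index (e.g. a Nino 3.4-style anomaly).
# The series, lag count, and lead time below are illustrative assumptions.
rng = np.random.default_rng(0)
n_months = 600
index = np.zeros(n_months)
for t in range(2, n_months):
    # Damped oscillation plus noise: a crude caricature of ENSO variability.
    index[t] = 1.6 * index[t - 1] - 0.7 * index[t - 2] + 0.2 * rng.standard_normal()


def make_samples(series, n_lags=3, lead=3):
    """Build (past n_lags values -> value `lead` months ahead) pairs."""
    X, y = [], []
    for t in range(n_lags - 1, len(series) - lead):
        X.append(series[t - n_lags + 1 : t + 1])
        y.append(series[t + lead])
    return np.array(X), np.array(y)


X, y = make_samples(index)
split = int(0.8 * len(X))  # chronological split: train on the past, test on the future
X_train, y_train, X_test, y_test = X[:split], y[:split], X[split:], y[split:]

# Ordinary least squares with an intercept column.
A_train = np.column_stack([X_train, np.ones(len(X_train))])
coef, *_ = np.linalg.lstsq(A_train, y_train, rcond=None)

A_test = np.column_stack([X_test, np.ones(len(X_test))])
pred = A_test @ coef

# Correlation between forecast and truth is a common ENSO skill score.
skill = np.corrcoef(pred, y_test)[0, 1]
print(f"3-month-lead correlation skill: {skill:.2f}")
```

A deep learning model would replace the least-squares fit with a network that takes spatial fields rather than a single index as input, but the framing (past inputs, a lead time, and a held-out skill score) stays the same.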

One of the current challenges in ML is how to constrain deep learning approaches such as CNNs, which are based on learning from large amounts of data, by incorporating the laws of physics into the model. Incorporating physics can give models structure that facilitates learning and makes their performance more interpretable. The El Niño prediction tutorial raises this problem and points out the importance of physical interpretability of the model, its predictions, and its results. Physical interpretability (that is, understanding what our model is doing under the hood, how it makes its predictions, and which physical considerations lead it to one prediction rather than another) is a cutting-edge research area for both climate science and ML.

FourCastNet: A practical introduction to a state-of-the-art deep learning global weather emulator from Pathak et al., 2022

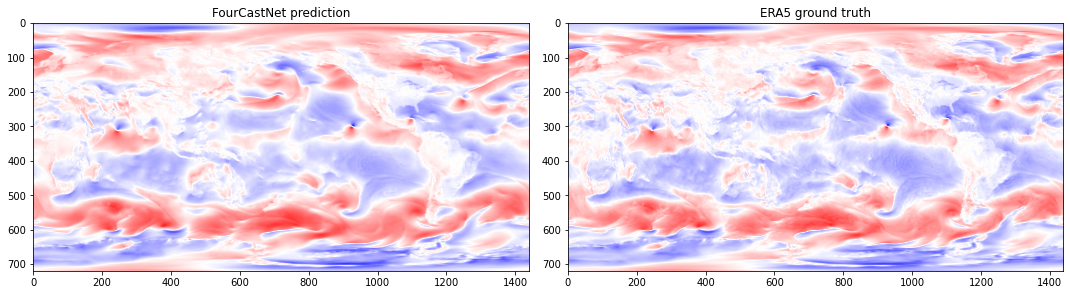

FourCastNet is a state-of-the-art deep learning-based surrogate for weather models, a tool that can be used to produce predictions without the computational cost of running the full weather model. FourCastNet is a CNN trained on ERA5, a reanalysis dataset consisting of hourly estimates of several atmospheric variables at a latitude-longitude resolution of 0.25 degrees and at elevations from the surface of the Earth to roughly 100 km, covering January 1940 to the present. The model generates a week-long forecast at 25 km resolution in less than 2 seconds, orders of magnitude faster than global forecast systems (GFS). Beyond the day-to-day weather, FourCastNet can capture several different types of extreme events, such as hurricanes. FourCastNet has been used successfully to predict a very broad range of extreme events, for example Africa’s hottest recorded heatwave in July 2018 as well as the 2023 hurricane season.
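To make those numbers concrete, the sketch below works out the grid implied by a 0.25-degree mesh and shows the general shape of an autoregressive rollout, where each prediction is fed back in as the next input. The 6-hour step is an assumption of this sketch, and `toy_emulator_step` is a hypothetical placeholder, not the FourCastNet model or its API.

```python
import numpy as np

# Grid implied by a 0.25-degree global latitude-longitude mesh (poles included).
RES_DEG = 0.25
n_lat = int(180 / RES_DEG) + 1   # 721 latitudes from -90 to 90
n_lon = int(360 / RES_DEG)       # 1440 longitudes from 0 to 359.75

# Assumption: the emulator advances the state 6 hours per call, so a
# 7-day forecast takes 28 autoregressive steps.
STEP_HOURS = 6
n_steps = 7 * 24 // STEP_HOURS


def toy_emulator_step(state: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for one forward pass of a trained emulator.

    Here it just shifts the field one grid cell along longitude and damps it.
    """
    return 0.99 * np.roll(state, shift=1, axis=-1)


def rollout(initial_state: np.ndarray, steps: int) -> np.ndarray:
    """Feed each prediction back in as the next input; return all steps."""
    states = [initial_state]
    for _ in range(steps):
        states.append(toy_emulator_step(states[-1]))
    return np.stack(states)


# Use a coarse toy grid so the example runs instantly on a laptop.
rng = np.random.default_rng(0)
initial = rng.standard_normal((2, 19, 36))   # (variables, lat, lon)
forecast = rollout(initial, n_steps)
print(forecast.shape)   # (n_steps + 1, variables, lat, lon)
print(f"Full-resolution grid: {n_lat} x {n_lon}")
```

Because every step consumes the previous step's output, errors can accumulate over the rollout, which is one reason forecast skill is usually reported as a function of lead time.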

Accurate, reliable, and efficient means of forecasting global weather patterns are of paramount importance to our ability to mitigate and adapt to climate change. While the model is trained in advance and the CNN weights are provided directly in the tutorial, users are shown the evaluation pipeline to understand how they might train such a model.

FourCastNet is trained using significant computational resources (both in terms of input data and GPU time). This tutorial gives a rare view into such a model by making the trained model weights, code, and datasets freely available. The availability of FourCastNet aids new research, for example by allowing the creation of rapid and inexpensive large-ensemble forecasts to complement and augment numerical weather prediction (NWP)-based forecasts.
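The idea behind a large-ensemble forecast can be sketched as follows: perturb the initial condition many times, roll each member forward with a fast emulator, and summarize the members with a mean and a spread. In this sketch `toy_step` is a hypothetical chaotic map standing in for an emulator, and the member count and noise level are arbitrary choices.

```python
import numpy as np


def toy_step(state: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for a fast emulator step (not FourCastNet itself).

    A chaotic logistic map applied per grid point, so small initial
    differences grow over time as they do in weather forecasts.
    """
    return 3.9 * state * (1.0 - state)


def ensemble_forecast(initial, n_members=100, n_steps=20, noise_std=1e-3, seed=0):
    """Perturb the initial condition, roll every member forward, and summarize."""
    rng = np.random.default_rng(seed)
    # One perturbed copy of the initial state per ensemble member.
    members = initial + noise_std * rng.standard_normal((n_members, *initial.shape))
    members = np.clip(members, 0.0, 1.0)  # keep the toy map in its valid range
    for _ in range(n_steps):
        members = toy_step(members)
    return members.mean(axis=0), members.std(axis=0)


initial = np.full((4, 8), 0.3)           # tiny (lat, lon) toy field
mean, spread = ensemble_forecast(initial)
print("ensemble mean shape:", mean.shape)
print("largest spread after rollout:", float(spread.max()))
```

The spread gives a rough measure of forecast uncertainty. With an emulator that runs in seconds, ensembles of hundreds or thousands of members become affordable in a way they are not for a full numerical model.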

While FourCastNet produces rapid forecasts, it has a number of limitations. As with many ML-based methodologies, FourCastNet does not perform data assimilation with observations, relying on ERA5 to provide real-time initial conditions. The model is also fully data-driven, without physics constraints. Additionally, the current resolution of 25 km is too coarse to capture the finer-scale structure of sub-grid processes. Lastly, FourCastNet is intended as a weather model, and its behavior on climate time scales is not well understood. The authors, however, envision coupling climate model output with FourCastNet in order to understand extreme weather in future climate scenarios on climate-range timescales.

ClimateLearn: Machine Learning for Predicting Weather and Climate Extremes from Nguyen et al. 2023

The extreme temperatures and precipitation in the summer of 2023 have made clear the impact that extreme weather can have. Fundamental to climate change mitigation and adaptation is (1) understanding the changing likelihood of extreme conditions in long-term observational records and (2) exploring the frequency and intensity with which such extremes might occur, especially at regional scales.

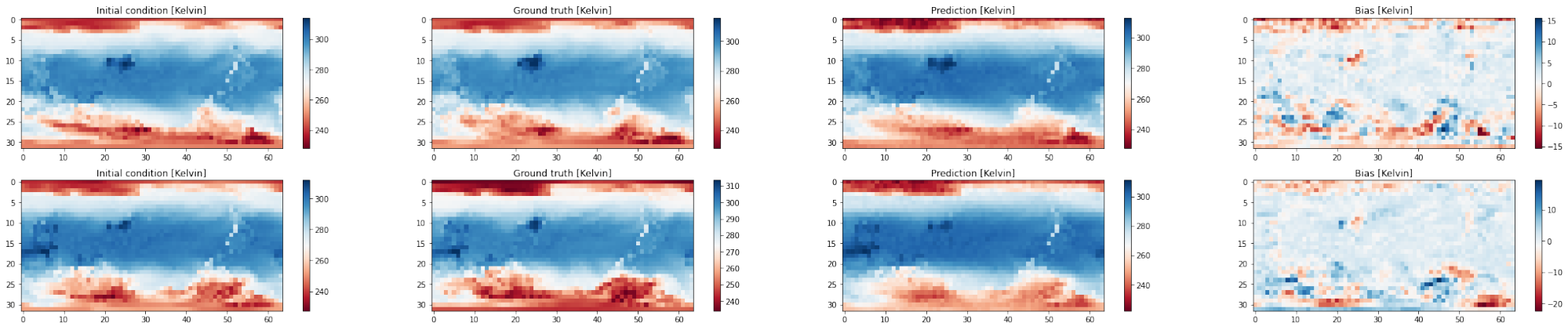

The last tutorial in the series, “Machine learning for climate extremes”, has two components: (1) a prognostic component where extremes are forecasted at a coarse resolution and (2) a mapping of the coarser resolution to a finer one. Downscaling, that is, mapping coarse-resolution data to finer resolutions, aims to bridge the gap between large-scale dynamical models and the end users who need localized forecasts and projections. Using ML, the tutorial combines temporal forecasting and statistical downscaling, providing a model which makes reasonably accurate predictions in most parts of the globe, except the polar regions.
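As a point of reference for what downscaling does mechanically, the sketch below implements plain bilinear interpolation from a coarse grid to a finer one. This is a simple non-ML baseline of the kind learned models are often compared against, not the tutorial's method; the function name and the choice to ignore longitude wrap-around are assumptions of the sketch.

```python
import numpy as np


def bilinear_downscale(coarse: np.ndarray, factor: int) -> np.ndarray:
    """Interpolate a 2D (lat, lon) field onto a grid `factor` times finer.

    Linear interpolation is applied along longitude, then along latitude.
    For simplicity the field is treated as non-periodic in longitude.
    """
    n_lat, n_lon = coarse.shape
    lat_c, lon_c = np.arange(n_lat), np.arange(n_lon)
    # Fine grid coordinates expressed in coarse-grid index units.
    lat_f = np.linspace(0, n_lat - 1, (n_lat - 1) * factor + 1)
    lon_f = np.linspace(0, n_lon - 1, (n_lon - 1) * factor + 1)

    along_lon = np.array([np.interp(lon_f, lon_c, row) for row in coarse])
    return np.array([np.interp(lat_f, lat_c, col) for col in along_lon.T]).T


coarse = np.array([[0.0, 2.0],
                   [4.0, 6.0]])
fine = bilinear_downscale(coarse, factor=2)
print(fine)
```

Interpolation can only smooth between coarse values. An ML downscaling model is instead trained on paired coarse and fine data so that it can add the local detail interpolation cannot recover.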

The visualization shows that the model makes reasonably accurate predictions in most parts of the globe and that its predictions correlate well with the ground truth. The model seems to make larger errors near the two poles, where the temperature is more unpredictable.
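One common way to quantify such a pattern is to compute a latitude-weighted RMSE alongside a per-latitude error profile. The sketch below uses synthetic data whose errors are made to grow toward the poles purely for illustration; it is not output from the tutorial's model, and the function names are hypothetical.

```python
import numpy as np


def lat_weighted_rmse(pred: np.ndarray, truth: np.ndarray, lats_deg: np.ndarray) -> float:
    """RMSE over a (lat, lon) grid, weighting each row by cos(latitude).

    On a regular latitude-longitude grid, cells shrink toward the poles, so
    unweighted averages overstate the importance of polar errors.
    """
    weights = np.cos(np.deg2rad(lats_deg))
    weights = weights / weights.mean()           # normalize to mean 1
    sq_err = (pred - truth) ** 2
    return float(np.sqrt((sq_err * weights[:, None]).mean()))


def rmse_by_latitude(pred: np.ndarray, truth: np.ndarray) -> np.ndarray:
    """Per-latitude RMSE, useful for spotting where errors concentrate."""
    return np.sqrt(((pred - truth) ** 2).mean(axis=1))


# Synthetic example: errors that grow toward the poles (an assumption
# made to mimic the pattern described above, not real model output).
lats = np.linspace(-87.5, 87.5, 36)
rng = np.random.default_rng(0)
truth = rng.standard_normal((36, 72))
error_scale = 0.5 + 2.0 * (np.abs(lats) / 90.0) ** 2
pred = truth + error_scale[:, None] * rng.standard_normal((36, 72))

unweighted = float(np.sqrt(((pred - truth) ** 2).mean()))
weighted = lat_weighted_rmse(pred, truth, lats)
profile = rmse_by_latitude(pred, truth)
print("unweighted RMSE:  ", unweighted)
print("lat-weighted RMSE:", weighted)
print("RMSE near the pole vs near the equator:", profile[0], profile[18])
```

When errors are concentrated at high latitudes, the weighted score comes out lower than the unweighted one, because polar grid cells cover much less area than equatorial ones.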

Conclusions and Summary

Through our series of Colab-based tutorials, you will get the chance to learn hands-on how to use a set of ML-based models and large amounts of data to make informed weather or climate-related predictions within hours. While ML methodologies in atmospheric science have a long way to go, it is essential to note that these fast, accurate, and reliable predictions were almost impossible to envision even a few years ago, let alone available to anyone with a good Wi-Fi connection. Moreover, such predictive tools go beyond the proof-of-concept phase and can be used operationally (e.g., FourCastNet).

From a scientific perspective, our tutorials allow users not only to make predictions but also to pursue climate-informed projects; each of these tutorials can play a threefold role:

- they can be an end-to-end data analysis project spanning different temporal and spatial phenomena in weather or climate, while still providing a foundation for forecasting other phenomena;

- all three tutorials represent machine learning-based tools ready to be used operationally and tuned for various applications the user might have in mind; and

- all three tutorials can be seen as tools to tackle deep, fundamental scientific questions in climate and weather prediction including, for example, tuning such tools for a seamless prediction framework or for new phenomena (e.g. wildfire prediction).

As a complete data analysis pipeline, our projects start with the APIs for accessing popular repositories that host global climate data and walk you through all the analysis steps, ending with visualization packages for plotting the results. The projects also lead students through ML architecture considerations, for example, hyperparameter tuning for each type of phenomenon, choosing the right optimization metrics for the problem, evaluating model performance, and much more.

Ultimately, these three tutorials represent a multi-faceted view and a hands-on way of exploring the rapidly changing boundaries of forecasting the behavior of a changing climate through AI. As we increasingly face climate manifestations such as heatwaves, extreme El Niños, or forest fires, tools like the ones described in these tutorials and the unprecedented amount of data we possess are an important part of adapting to and thriving in our changing climate.

Cane, M. A., Zebiak, S. E. and Dolan, S. C. (1986). Experimental forecasts of El Niño. Nature, 321(6073), 827-832.

Ham, Y.-G., Kim, J.-H. and Luo, J.-J. (2019). Deep learning for multi-year ENSO forecasts. Nature, 573(7775), 568-572.

Shukla, J. (1998). Predictability in the midst of chaos: A scientific basis for climate forecasting. Science, 282, 728-731.