Using Reinforcement Learning to Improve Energy Management for Grid-Interactive Buildings

Implementation in the CityLearn environment and key challenges for multi-agent reinforcement learning

Buildings consume a significant share of global energy and were responsible for around 19% of greenhouse gas emissions in 2010, but they also have the potential to reduce their carbon footprint by 50-90%. Optimal building decarbonization requires electrification of end-uses and integration of renewable energy systems. This integration requires aligning the availability of renewable energy with energy demand, and it must be carefully managed during operation to ensure the reliability and stability of the grid.

Demand response (DR) is an energy-management strategy that allows consumers and prosumers to provide grid flexibility by reducing energy consumption, shifting energy use, or generating and storing energy. Buildings and communities that offer such two-way interaction with the grid are often called grid-interactive buildings or communities.

Advanced control systems can automate the operation of energy systems, but effective DR requires intelligent load control. Advanced control algorithms such as Model Predictive Control (MPC) and Reinforcement Learning (RL) have been proposed for such applications. RL is a type of machine learning that involves learning through trial and error and can take advantage of real-time and historical data to provide adaptive DR capabilities.

A major challenge for RL in DR is the systematic comparison of building optimization algorithms, which requires a shared collection of representative environments. More specifically, in the context of building and HVAC (heating, ventilation, and air conditioning) systems, there are nine challenges that need to be addressed in order to make those environments practical for real-world applications. Inspired by Dulac-Arnold et al., we have summarized them in a recent paper as follows:

- Being able to learn on live systems from limited samples

- Dealing with unknown and potentially large delays in the system actuators, sensors or feedback

- Learning and acting in high-dimensional state and action spaces

- Reasoning about system constraints that should never or rarely be violated

- Interacting with systems that are partially observable

- Learning from multiple, or poorly specified, objective functions

- Being able to provide actions quickly, especially for systems with low latencies

- Training off-line from fixed logs of an external policy

- Providing system operators with explainable policies

In this post we show an example for Challenge 8, the eighth item in the list above.

Training off-line from fixed logs of an external policy

Challenge 8 is particularly important because in buildings there is typically a rule-based controller (RBC) available. An RBC is a deterministic policy, in essence a sequence of if-then-else rules that the controller executes. If historical data of the RBC policy (fixed logs of an external policy) is available, it could be used to jump-start a model-free RL controller, thereby reducing its learning time.
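To make the idea of "fixed logs of an external policy" concrete, here is a minimal sketch of an RBC written as if-then-else rules, together with a loop that records its transitions. Everything in it is an assumption made for illustration: the observation layout (hour of day, battery state of charge), the thresholds, the tariff, and the `toy_step` stand-in environment are hypothetical and are not the CityLearn API or the RBC used in our experiments.

```python
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical observation: (hour of day in 1..24, battery state of charge in 0..1)
Observation = Tuple[int, float]


def rbc_policy(obs: Observation) -> float:
    """Toy rule-based controller returning a storage action in [-1, 1].

    Positive values charge the battery, negative values discharge it.
    The thresholds are illustrative only.
    """
    hour, soc = obs
    if hour >= 22 or hour <= 8:
        # Night: charge slowly until the battery is full
        return 0.1 if soc < 1.0 else 0.0
    else:
        # Day: discharge slowly until the battery is empty
        return -0.08 if soc > 0.0 else 0.0


@dataclass
class Transition:
    obs: Observation
    action: float
    reward: float
    next_obs: Observation


def toy_step(obs: Observation, action: float) -> Tuple[Observation, float]:
    """Stand-in for an environment step (NOT CityLearn)."""
    hour, soc = obs
    next_soc = min(1.0, max(0.0, soc + action))
    price = 0.3 if 9 <= hour <= 21 else 0.1  # assumed time-of-use tariff
    energy_bought = next_soc - soc           # negative when discharging
    reward = -price * energy_bought          # lower cost means higher reward
    next_hour = hour % 24 + 1
    return (next_hour, next_soc), reward


def collect_rbc_logs(n_hours: int, start: Observation = (1, 0.0)) -> List[Transition]:
    """Run the deterministic RBC for n_hours and record every transition."""
    logs: List[Transition] = []
    obs = start
    for _ in range(n_hours):
        action = rbc_policy(obs)
        next_obs, reward = toy_step(obs, action)
        logs.append(Transition(obs, action, reward, next_obs))
        obs = next_obs
    return logs


# One month of hourly logs (744 hours)
logs = collect_rbc_logs(744)
```

Logs of this shape (observation, action, reward, next observation) are the kind of data that could be used to pre-fill the replay buffer of an off-policy algorithm such as SAC before it starts controlling the building.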

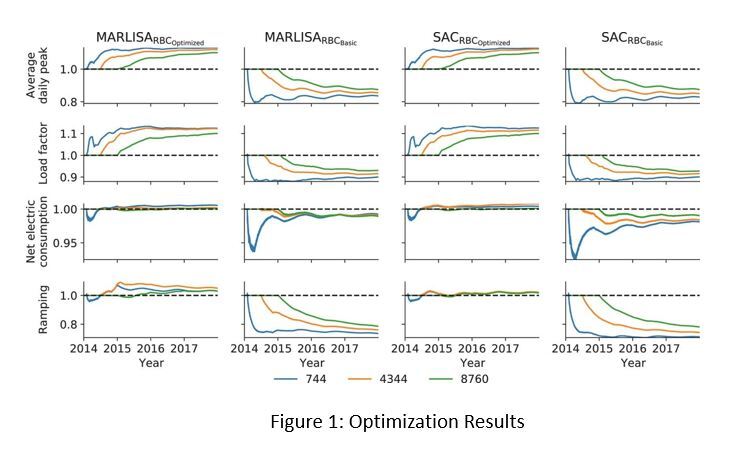

To investigate this challenge, we benchmarked several RL configurations in CityLearn, an OpenAI Gym environment for energy management in grid-interactive smart communities, using the dataset of the CityLearn Challenge 2021. This data consists of the energy usage of one medium office, one fast-food restaurant, one retail store, one strip mall retail store, and five medium multi-family homes in Austin, TX. Data for a four-year period is available. We compared independent RL agents (using Soft Actor-Critic, or SAC) and coordinating RL agents (the MARLISA algorithm) to an industry best-practice RBC (default settings) and an optimized RBC (tuned by grid search). The agents were pre-trained for one month (744 hours), six months (4344 hours), or one year (8760 hours) with either the basic or the optimized RBC. During the training period the building is operated with the respective RBC, and after the training period the RL algorithms are switched on. We then compare their performance across a set of key performance indicators (KPIs) in the following figure:

We show the result of each agent (MARLISA or SAC) normalized by the performance of the corresponding RBC (basic or optimized), so a value of 1 is the RBC baseline. Longer training periods, i.e., more historical data, do not necessarily improve the results: all curves eventually end up very close together in each experiment. This suggests that a small amount of training data from a basic RBC is sufficient to jump-start RL controllers. Another relevant insight is that both RL agents (SAC and MARLISA) improve on the performance of the basic RBC (curves drop below 1), which is expected. However, if the RBC is sufficiently calibrated, as the optimized RBC is, neither RL agent outperforms it (curves go above 1, the baseline). This shows that the quality of the RBC, i.e., of the available offline policy, affects the results more than the amount of available data.
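The normalization used above can be sketched in a few lines. This is an illustration under stated assumptions: the KPI names and all numbers are made up for the example (they are not results from our experiments), and every KPI is assumed to be lower-is-better, so a ratio below 1 means the agent beat the RBC.

```python
from typing import Dict


def normalize_kpis(agent: Dict[str, float], baseline: Dict[str, float]) -> Dict[str, float]:
    """Divide each agent KPI by the baseline (RBC) KPI.

    Assuming lower-is-better KPIs, a ratio below 1 means the agent
    outperformed the baseline and a ratio above 1 means it did worse.
    """
    return {name: agent[name] / baseline[name] for name in baseline}


# Made-up numbers, for illustration only
rbc_kpis = {"peak_demand": 50.0, "net_consumption": 1200.0}
agent_kpis = {"peak_demand": 45.0, "net_consumption": 1260.0}

scores = normalize_kpis(agent_kpis, rbc_kpis)
average_score = sum(scores.values()) / len(scores)

for name, ratio in scores.items():
    verdict = "better than" if ratio < 1 else "not better than"
    print(f"{name}: {ratio:.3f} ({verdict} the RBC)")
```

In this made-up case the agent lowers peak demand but increases net consumption, which is why looking at several KPIs side by side matters more than any single ratio.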

Another challenge, not in the list above, is the lack of a common benchmark environment to evaluate state-of-the-art (SOTA) models. In our context, we can use standardized environments such as BOPTEST, ACTB, CityLearn or Sinergym. A common benchmark environment could trigger the same excitement and progress in the field as ImageNet did for deep learning. If you’re interested in learning more, there is an online tutorial to go along with slides presented at ICLR, as well as a new community-authored paper.

As we have shown in this blog post, RL can be a very powerful tool, particularly for improving on suboptimal RBCs, without requiring long training times or large data sets. However, for optimized RBCs, RL does not seem to provide benefits. Standardized environments like CityLearn address two of the main challenges of the field: the need for offline training data, and the need for common environments in which to define SOTA models in RL, as we already have in computer vision.

To make the most of this, researchers in both building engineering and computer science should work together to transfer theoretical findings and new ideas into practical solutions for building energy management.